To help me learn Dutch, I decided to build a project to support the process. I wanted to use modern LLM APIs and to test the viability of “vibe coding” on a large project. I created a prototype with a standard JavaScript/HTML/CSS front end and a Flask backend. This section serves as a post-mortem for the project. First, I was able to create a complete and complex application without writing any code myself, thanks to the advances Google has made with Gemini 3 CLI. However, I have many concerns about the structure of the code and its viability as a production product right out of the box.

Main Use of the Application

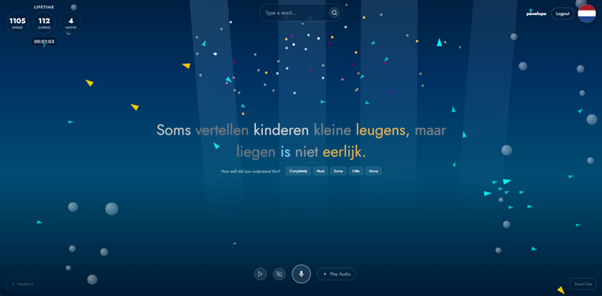

A Dutch sentence is chosen and displayed in the middle of the screen. The user can then click on a word they don’t know, and another sentence containing that word appears. The app tries to choose the sentence with the highest comprehensibility for the user, a metric based on how frequently the user has seen the words in the sentence. If no sentence in the database contains that word, the app sends a request to OpenAI’s ChatGPT to generate one.
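
A minimal sketch of how such a comprehensibility metric could work, assuming the score is the average per-word familiarity, with familiarity capped at a fixed number of sightings. The names `FAMILIARITY_CAP`, `tokenize`, `comprehensibility`, and `pick_sentence` are hypothetical and are not taken from the app's code.

```python
from typing import Dict, List, Optional

# Hypothetical cap: a word seen this many times counts as fully familiar.
FAMILIARITY_CAP = 100
PUNCTUATION = ".,!?;:\"'()"


def tokenize(sentence: str) -> List[str]:
    """Split on whitespace, strip surrounding punctuation, and lowercase."""
    words = (w.strip(PUNCTUATION).lower() for w in sentence.split())
    return [w for w in words if w]


def comprehensibility(sentence: str, seen_counts: Dict[str, int]) -> float:
    """Mean per-word familiarity in [0, 1]; unseen words contribute 0."""
    words = tokenize(sentence)
    if not words:
        return 0.0
    capped = (min(seen_counts.get(w, 0), FAMILIARITY_CAP) for w in words)
    return sum(c / FAMILIARITY_CAP for c in capped) / len(words)


def pick_sentence(
    target: str, sentences: List[str], seen_counts: Dict[str, int]
) -> Optional[str]:
    """Return the most comprehensible sentence containing `target`.

    Returns None when no stored sentence contains the word, which is the
    point at which the caller would fall back to generating a new one.
    """
    target = target.lower()
    candidates = [s for s in sentences if target in tokenize(s)]
    if not candidates:
        return None
    return max(candidates, key=lambda s: comprehensibility(s, seen_counts))
```

Under this assumed scoring, a sentence made mostly of well-known words wins over one with several unfamiliar words, so the clicked word tends to be the only new thing the user has to decode.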

In addition to automated sentence generation, the app generates text-to-speech audio, which can help the user with pronunciation. It also tracks how many times a user has seen each word: the brighter a word’s color, the better known the word is. Yellow words have never been seen before. Blue words have been seen more than 100 times. Additional features include a bug report feature, recording functionality so a user can record their own pronunciation of each word, and a rather interesting dynamic background.

Simulation & Sound

The background is a simulation of boids, a very simple form of AI in which each agent follows a few simple rules. Each “fish” wants to be close to its neighbors, but not so close that it is crowded. It wants to move in the average direction of the fish surrounding it. In addition, it wants to approach food and avoid sharks. These very simple behaviors result in a fish “schooling” effect.
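
The three flocking rules can be sketched as a single update step. This is an illustrative Python version, not the app's JavaScript. Every radius and weight below is a guess, and the food and shark rules are left out to keep it short.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Boid:
    x: float
    y: float
    vx: float
    vy: float


# All radii and weights are illustrative guesses, not the app's values.
NEIGHBOR_RADIUS = 50.0
CROWD_RADIUS = 10.0
COHESION = 0.01
ALIGNMENT = 0.05
SEPARATION = 0.1
MAX_SPEED = 4.0


def step(boids: List[Boid]) -> List[Boid]:
    """Return the flock advanced by one tick; the input is not mutated."""
    updated = []
    for b in boids:
        neighbors = [
            o for o in boids
            if o is not b and math.hypot(o.x - b.x, o.y - b.y) < NEIGHBOR_RADIUS
        ]
        vx, vy = b.vx, b.vy
        if neighbors:
            n = len(neighbors)
            # Cohesion: steer toward the neighbors' center of mass.
            vx += (sum(o.x for o in neighbors) / n - b.x) * COHESION
            vy += (sum(o.y for o in neighbors) / n - b.y) * COHESION
            # Alignment: nudge velocity toward the neighbors' average velocity.
            vx += (sum(o.vx for o in neighbors) / n - b.vx) * ALIGNMENT
            vy += (sum(o.vy for o in neighbors) / n - b.vy) * ALIGNMENT
            # Separation: push away from any neighbor that is too close.
            for o in neighbors:
                if math.hypot(o.x - b.x, o.y - b.y) < CROWD_RADIUS:
                    vx += (b.x - o.x) * SEPARATION
                    vy += (b.y - o.y) * SEPARATION
        # Clamp speed so the flock does not accelerate without bound.
        speed = math.hypot(vx, vy)
        if speed > MAX_SPEED:
            vx, vy = vx / speed * MAX_SPEED, vy / speed * MAX_SPEED
        updated.append(Boid(b.x + vx, b.y + vy, vx, vy))
    return updated
```

Food attraction and shark avoidance would fit the same pattern: one more velocity nudge toward or away from a point, each with its own weight.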

Sound effects are also implemented. When a fish eats food, the program plays a tone drawn from the notes of a scale, moving from note to note by specific intervals. I won’t explain the music theory here, but the result sounds musical rather than like random noise.
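
To make the idea concrete, here is a small sketch of choosing tones from a scale. The scale (C major pentatonic), the allowed step sizes, and the names `SCALE`, `STEPS`, `degree_to_frequency`, and `next_degree` are all assumptions for illustration. Only the MIDI-to-frequency formula is standard.

```python
import random
from typing import List

# Assumed scale: C major pentatonic, as semitone offsets from the root.
SCALE: List[int] = [0, 2, 4, 7, 9]
ROOT_MIDI = 60  # middle C
# Assumed allowed moves, in scale steps, each time a fish eats.
STEPS: List[int] = [-2, -1, 1, 2]


def degree_to_frequency(degree: int) -> float:
    """Convert a scale degree (which may span octaves) to a frequency in Hz."""
    octave, index = divmod(degree, len(SCALE))
    midi = ROOT_MIDI + 12 * octave + SCALE[index]
    # Standard equal-temperament formula: A4 (MIDI note 69) is 440 Hz.
    return 440.0 * 2 ** ((midi - 69) / 12)


def next_degree(current: int, rng: random.Random, low: int = 0, high: int = 9) -> int:
    """Move by one of the allowed steps, staying within [low, high]."""
    candidates = [current + s for s in STEPS if low <= current + s <= high]
    return rng.choice(candidates)
```

Because every tone comes from the same scale and each move is a small step, consecutive tones form a melody-like line instead of jumping between unrelated pitches.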

Things that went well:

- It was able to quickly create complex CSS animations with minimal friction.

- It was able to create a good backend that functioned well.

- It created database code that was protected against basic SQL injection, though this alone does not mean the application can be trusted to be secure.

- It was able to speed up development significantly.

- It turned out to be a solid prototype that allowed me to rapidly test many features.

- It created a good UX.
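
As an illustration of the SQL injection point in the list above, this is the kind of parameterized query that gives that basic protection. It is a sketch that assumes SQLite through Python's built-in `sqlite3` module. The `word_counts` table and the function names are hypothetical, not the app's actual schema.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    """Create the hypothetical table used by this example."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS word_counts ("
        "user_id INTEGER NOT NULL, word TEXT NOT NULL, "
        "seen INTEGER NOT NULL, PRIMARY KEY (user_id, word))"
    )


def record_seen(conn: sqlite3.Connection, user_id: int, word: str) -> None:
    """Increment a word's seen-count, passing all values as bound parameters."""
    cur = conn.execute(
        "UPDATE word_counts SET seen = seen + 1 WHERE user_id = ? AND word = ?",
        (user_id, word),
    )
    if cur.rowcount == 0:
        # First sighting: no row was updated, so insert one.
        conn.execute(
            "INSERT INTO word_counts (user_id, word, seen) VALUES (?, ?, 1)",
            (user_id, word),
        )


def seen_count(conn: sqlite3.Connection, user_id: int, word: str) -> int:
    """Return how often the user has seen the word (0 if never)."""
    row = conn.execute(
        "SELECT seen FROM word_counts WHERE user_id = ? AND word = ?",
        (user_id, word),
    ).fetchone()
    return row[0] if row else 0
```

The `?` placeholders keep user input out of the SQL text, so a "word" such as `hond'; DROP TABLE word_counts; --` is stored as plain data. This covers only one class of vulnerability, which is why it does not make the application secure on its own.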

Things that did not go well:

- Refactoring issues: It often struggled with refactoring code. I wanted to move to a frontend framework rather than pure JavaScript, as the complexity rapidly got out of hand. It ended up layering the framework code on top of the existing JavaScript, leaving non-functional code buried in the program.

- Data Desynchronization: During prototyping I used local storage, but the LLM later struggled to move everything to the database. It was unclear what was stored where, leading to a muddy situation that was difficult to debug.

- Managing complexity: LLMs don’t do a good job of engineering an application through incremental additions. The code gets very complex very quickly, and using an LLM encourages you not to look at the code.

Conclusion

I believe that the use of LLMs can greatly increase the productivity and expression of a programmer. However, they are not a substitute for understanding software architecture and knowledge of programming in general. This application contained many pitfalls that a beginner could easily overlook and that could cause massive problems in a production environment.

If you are going to use AI, I recommend understanding software architecture and the best practices for handling data, so that you can prevent data loss, sensitive data exposure, and other reliability and security risks. You should also try to understand each function the AI generates and make sure you know what is happening under the hood. To that end, here is the approach I would take if I were developing enterprise-grade software with an LLM:

- Keep the context of the LLM small. Only use it to generate a small part of the program at a time, and verify everything that it creates. It can be a good idea to scaffold out functions yourself and let the LLM fill them in.

- If you can’t verify everything it creates, treat it as a black box that may or may not work properly. Never trust its output, and do not use it without validation in places that handle sensitive data. That is a recipe for disaster.

- Use it to create modules that are loosely coupled with the rest of the program. Avoid tightly coupling LLM-generated code with the program. Keep it separate, and keep it obvious which parts of the program are LLM-generated.

- Create a technical design first. If you are simply prototyping, understand that you should likely not attempt to turn the prototype into a production application. Generated prototypes should be disposable.

- Follow and understand best practices in software development. LLMs are not a substitute for learning.

- Keep yourself accountable and understand the liability that LLMs introduce.

- Test your code extensively. The LLM can break any feature during any prompt, and it can easily touch unrelated parts of the program and break them. Code generated by an LLM must be tested more extensively than code engineered by a human.

- It’s probably best to use a strongly typed, compiled language in any production scenario. It is important to know where the code will fail before it fails, and a strongly typed, compiled language also makes the code easier to refactor.
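
One way to combine the scaffolding and testing advice from the list above is to write the signature, docstring, and tests yourself, and delegate only the function body. The sketch below uses Python to match the Flask backend. `normalize_word` and its test are hypothetical examples, not code from the app.

```python
def normalize_word(raw: str) -> str:
    """Lowercase a token and strip punctuation from both ends.

    The signature, this docstring, and the test below are written by hand.
    Only the body is the kind of small, checkable unit handed to the LLM.
    """
    return raw.strip(".,!?;:\"'()").lower()


def test_normalize_word() -> None:
    # Written before the body exists, so the contract does not come from the LLM.
    assert normalize_word("Hond.") == "hond"
    assert normalize_word("(de)") == "de"
    assert normalize_word("zee-egel") == "zee-egel"  # inner hyphen is kept
    assert normalize_word("") == ""
```

Because the tests encode the contract independently of the generated body, they can be re-run after every prompt to catch the case where the LLM quietly changes behavior elsewhere.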

Warning: before releasing any LLM-generated code into a production environment, ensure that your application will not harm the user through security vulnerabilities, mishandling of sensitive data, or other means. LLMs can feel like a superpower, but don’t let them inflate your confidence. Be diligent, be thoughtful, be safe.